Ensemble Learning

- Aryan

- May 17, 2025

- 8 min read

INTRODUCTION TO ENSEMBLE LEARNING

The word "ensemble" means a group, such as a group of musicians or other performers. In machine learning, an ensemble is a collection of multiple models. When we combine different machine learning models into one larger model, the technique is called ensemble learning.

Wisdom of the Crowd

Wisdom of the crowd is the idea that a large group of people, each contributing their individual opinions or judgments, can often produce a result or decision that is more accurate or reliable than any single expert. This concept relies on the idea that collective intelligence, when aggregated properly, can correct for individual errors, biases, or limitations.

Example: Product Reviews on an E-commerce Website

Let’s take a product review scenario on an online shopping platform like Amazon. Scenario:

You're thinking of buying a new pair of wireless headphones.

You go to the product page and see 4,000 reviews.

The average rating is 4.4 out of 5 stars.

You read that some people love the sound quality, others mention issues with battery life.

A few reviews might be biased or fake, but most are real customers sharing their experiences.

How the "Wisdom of the Crowd" Works Here:

Even though:

Some people might give overly positive reviews.

Others might be too critical.

A few may have received defective units.

And a handful may not know how to use the product properly.

When you aggregate thousands of reviews, you usually get a reasonably accurate picture of the product's quality.

This is the wisdom of the crowd in action:

Individual biases tend to cancel out.

Extreme opinions are diluted by the average.

The overall average rating (e.g., 4.4/5) is likely a very good estimate of the product’s actual quality.

Conditions That Make It Work

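The wisdom of the crowd works well only when certain conditions are met: the reviewers are diverse, they form their opinions independently, enough opinions are aggregated, and the inputs are genuine.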

When It Can Fail

If all reviewers are influenced by a viral negative post → no independence

If only fans or haters of the brand review the product → lack of diversity

If only a few people review it → not enough aggregation

If reviews are fake or manipulated → poor quality crowd

Summary

Wisdom of the crowd means the average opinion of many people is often more accurate than a single opinion, even an expert’s.

In the case of product reviews, this helps customers make informed decisions based on the collective experience of thousands.

It works best when people are diverse, independent, and their inputs are properly aggregated.
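
The effect of independence can be illustrated with a small simulation. This is only a sketch under stated assumptions: the "true" quality of 4.4, the Gaussian noise, and the shared bias of -1.0 are hypothetical values chosen for illustration, and ratings are not clipped to the 1 to 5 star range.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

TRUE_QUALITY = 4.4   # hypothetical "real" quality of the headphones
N_REVIEWERS = 4000   # same crowd size as in the example


def crowd_ratings(shared_bias=0.0):
    """Ratings with independent noise per reviewer plus an optional bias shared by everyone."""
    return [
        TRUE_QUALITY + shared_bias + random.gauss(0, 1.0)
        for _ in range(N_REVIEWERS)
    ]


independent = crowd_ratings()
biased = crowd_ratings(shared_bias=-1.0)  # e.g. everyone read the same viral negative post

# How far a typical single reviewer is from the truth.
typical_individual_error = statistics.mean(abs(r - TRUE_QUALITY) for r in independent)
# How far the crowd average is from the truth, with and without a shared bias.
independent_error = abs(statistics.mean(independent) - TRUE_QUALITY)
biased_error = abs(statistics.mean(biased) - TRUE_QUALITY)

print(f"typical single reviewer error: {typical_individual_error:.2f}")
print(f"crowd error (independent):     {independent_error:.2f}")
print(f"crowd error (shared bias):     {biased_error:.2f}")
```

Independent noise largely cancels in the average, while a bias shared by every reviewer does not, no matter how many reviews are collected.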

Core Idea of Ensemble Learning in Machine Learning

What is Ensemble Learning?

Ensemble Learning is a machine learning approach that combines the predictions of multiple models (called base learners) to make a final prediction. The idea is that a group of models, when aggregated intelligently, can perform better than any single model.

Core Idea

"Instead of relying on a single model, ensemble learning builds a collection of models and combines their outputs to produce better, more stable, and more accurate predictions."

This principle is based on the concept of the "Wisdom of the Crowd", which suggests that collective decisions made by a diverse group are often more accurate than decisions made by individuals.

Why Ensemble Learning Works

Error Reduction

Individual models may make different types of errors.

By combining their predictions, ensemble methods can average out these errors.

Bias-Variance Trade-off

Reduces variance by averaging predictions across models (e.g., in bagging).

Some techniques also reduce bias (e.g., boosting).

Robustness and Stability

Less sensitive to fluctuations in the training data or outliers.

Provides more consistent performance on unseen data.

Model Generalization

By leveraging multiple hypotheses, ensemble models tend to generalize better than single models.

Importance of Diversity

For an ensemble to outperform individual models, the base learners must be diverse, meaning they should make different types of errors. Otherwise, combining near-identical models adds little or no benefit.
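
A standard variance identity makes the role of diversity concrete. As an illustrative assumption (not stated in the original), suppose each of the $M$ base models has an error $\varepsilon_m$ with the same variance $\sigma^2$, and every pair of errors has the same correlation $\rho$. Then the variance of the averaged error is:

```latex
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M}\varepsilon_m\right)
  = \frac{1}{M^2}\left[M\sigma^2 + M(M-1)\rho\,\sigma^2\right]
  = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
```

With uncorrelated errors ($\rho = 0$) the variance shrinks as $\sigma^2 / M$. With perfectly correlated errors ($\rho = 1$) it stays at $\sigma^2$, so adding more copies of the same model does not help.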

There are three key strategies to create diversity:

1. Using Different Algorithms (Algorithmic Diversity)

Train models of different types (e.g., Decision Tree, SVM, Logistic Regression).

Each algorithm learns patterns differently, resulting in varied decision boundaries.

This is called a heterogeneous ensemble.

2. Using the Same Algorithm on Different Data (Data Diversity)

Train multiple instances of the same algorithm using different data subsets.

Each model sees a different sample or feature subset, creating varied learning experiences.

Techniques:

Random sampling (bagging)

Feature subset selection

Data partitioning

This is a homogeneous ensemble.

3. Combining Both (Hybrid Diversity)

Use different algorithms trained on different data subsets.

This creates maximum diversity in both learning strategies and exposure to data.

This is the most flexible and often the most powerful approach.
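
The data-diversity techniques listed under strategy 2 can be sketched with the standard library alone. The helper names and the column names below are hypothetical and exist only for this illustration.

```python
import random


def bootstrap_indices(n_rows, rng):
    """Random sampling: draw n_rows row indices with replacement (the sampling step of bagging)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def feature_subset(feature_names, k, rng):
    """Feature subset selection: pick k distinct features at random."""
    return sorted(rng.sample(feature_names, k))


def partition(n_rows, n_parts):
    """Data partitioning: split row indices into n_parts disjoint, nearly equal chunks."""
    return [list(range(start, n_rows, n_parts)) for start in range(n_parts)]


rng = random.Random(42)
features = ["iq", "cgpa", "age", "projects"]  # hypothetical column names

# Each base model gets its own view of the data: different rows and different columns.
for model_id in range(3):
    rows = bootstrap_indices(8, rng)
    cols = feature_subset(features, 2, rng)
    print(f"model {model_id}: rows={rows} features={cols}")

print(partition(8, 3))  # [[0, 3, 6], [1, 4, 7], [2, 5]]
```

Because bootstrap samples are drawn with replacement, some rows repeat and others are left out, which is what makes each model's training set different.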

How Ensemble Models Make Predictions

Classification Example

Suppose we have a dataset with features like IQ and CGPA, and an output label: "Placement Status" (Placed / Not Placed).

We train an ensemble with five classification models.

Now, we receive a new student profile (a query point), and we want to predict placement status:

Model 1 → Placed

Model 2 → Placed

Model 3 → Not Placed

Model 4 → Placed

Model 5 → Not Placed

We aggregate the predictions using majority voting:

3 models voted "Placed"

2 models voted "Not Placed"

Final prediction: Placed, because the majority (3 out of 5) supports this outcome.

This is called hard voting (based on discrete class labels).

If models provide probabilities instead of hard labels, we can use soft voting (average probabilities).
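
The hard-voting step for the five predictions above fits in a few lines. This is a minimal sketch; `hard_vote` is a hypothetical helper name, not a library function.

```python
from collections import Counter

# Predictions of Model 1 to Model 5 for the new student profile.
predictions = ["Placed", "Placed", "Not Placed", "Placed", "Not Placed"]


def hard_vote(labels):
    """Return the most common label and the number of votes it received."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes


label, votes = hard_vote(predictions)
print(f"{label} ({votes} of {len(predictions)} votes)")  # Placed (3 of 5 votes)
```

Using an odd number of models for a two-class problem avoids ties; in this sketch a tie would be broken in favor of the label seen first.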

Regression Example

Now suppose the task is predicting the LPA (Lakhs Per Annum) salary package based on IQ and CGPA.

We train an ensemble of five regression models.

We input a new student's data to all models in the ensemble. Each model gives its predicted LPA:

Model 1 → 5.2 LPA

Model 2 → 4.8 LPA

Model 3 → 5.0 LPA

Model 4 → 5.1 LPA

Model 5 → 4.9 LPA

We compute the mean prediction:

Final Prediction = (5.2 + 4.8 + 5.0 + 5.1 + 4.9) / 5 = 5.0 LPA

Final output: 5.0 LPA

This method of averaging predictions is a standard regression ensemble approach.
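
The same averaging can be checked in code. The median is shown alongside the mean as one possible alternative when a single model produces an extreme value; it is an addition for illustration and not part of the example above.

```python
import statistics

# Predicted packages (in LPA) from Model 1 to Model 5.
predictions_lpa = [5.2, 4.8, 5.0, 5.1, 4.9]

ensemble_mean = statistics.mean(predictions_lpa)      # the averaging rule used above
ensemble_median = statistics.median(predictions_lpa)  # less affected by one extreme prediction

print(round(ensemble_mean, 2), ensemble_median)  # 5.0 5.0
```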

Benefits of Ensemble Learning

Improved Accuracy: Generally better than single models.

Reduced Overfitting: Especially in high-variance models like decision trees.

Higher Generalization: Performs better on unseen data.

Robustness: Less sensitive to data noise and outliers.

Limitations and Challenges

Increased Complexity: More models mean more training and management.

Computational Cost: Requires more memory and processing time.

Interpretability: Harder to explain than a single model.

Diminishing Returns: Beyond a point, adding more models may not improve performance.

Final Summary

Ensemble Learning improves model performance by combining multiple diverse models to make a collective decision.

Diversity among base models is essential, and it can be introduced in three ways:

Using different algorithms (heterogeneous ensemble)

Using same algorithm on different data (homogeneous ensemble)

Combining both algorithm and data diversity (hybrid ensemble)

In classification, ensemble predictions are often based on majority vote.

In regression, predictions are typically averaged across all models.

This simple yet powerful idea has made ensemble learning a cornerstone of modern machine learning, especially in real-world applications like fraud detection, recommendation systems, and predictive modeling.

Types of Ensemble Learning

Voting Ensemble

What is it?

Voting combines predictions from multiple different models.

Used mainly for classification (but adaptable to regression).

Two types:

Hard Voting: Takes the majority class label.

Soft Voting: Averages probability scores and picks the most probable class.

Classification Example:

Imagine we’re predicting fruit types 🍎🍌🍇 using 3 models:

| Sample | Logistic Regression | Decision Tree | SVM | Hard Voting Output |
|---|---|---|---|---|
| X1 | 🍎 Apple | 🍌 Banana | 🍎 Apple | 🍎 Apple (majority wins) |

Soft Voting (Probabilities):

| Class | Model 1 | Model 2 | Model 3 | Average |
|---|---|---|---|---|
| 🍎 | 0.7 | 0.4 | 0.8 | 0.63 |
| 🍌 | 0.3 | 0.6 | 0.2 | 0.37 |

Final Output: 🍎 Apple (highest average)
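
The soft-voting table can be reproduced with a short sketch. The function name `soft_vote` and the dictionary layout are assumptions made for this illustration.

```python
# Class probabilities reported by the three models for one sample.
model_probs = [
    {"Apple": 0.7, "Banana": 0.3},  # Model 1
    {"Apple": 0.4, "Banana": 0.6},  # Model 2
    {"Apple": 0.8, "Banana": 0.2},  # Model 3
]


def soft_vote(prob_dicts):
    """Average each class probability across models and return the most probable class."""
    classes = prob_dicts[0].keys()
    averages = {c: sum(p[c] for p in prob_dicts) / len(prob_dicts) for c in classes}
    winner = max(averages, key=averages.get)
    return winner, averages


winner, averages = soft_vote(model_probs)
print(winner, {c: round(v, 2) for c, v in averages.items()})
# Apple {'Apple': 0.63, 'Banana': 0.37}
```

Note that Model 2 on its own would pick Banana; soft voting lets the two more confident Apple predictions outweigh it.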

Regression Example:

Suppose you’re predicting house prices using:

Linear Regression → ₹250k

Decision Tree → ₹270k

SVR → ₹260k

Voting (Averaging):

Final prediction = (250 + 270 + 260) / 3 = ₹260k

Bagging (Bootstrap Aggregating)

What is it?

Trains multiple instances of the same model on random bootstrapped samples of the data.

Models are trained independently in parallel.

The final prediction is the average of the models' outputs (regression) or their majority vote (classification).

Reduces variance (i.e., helps prevent overfitting).

Classification Example: Random Forest

You train 10 decision trees on different random samples.

Each tree gives a prediction for a flower class: 🌹🌻🌷

Suppose:

4 say 🌷

3 say 🌹

3 say 🌻

→ Output = 🌷 (most votes: 4 of 10, a plurality rather than a strict majority)

Regression Example: Random Forest Regressor

Each tree predicts a price:

Tree 1: ₹500k

Tree 2: ₹520k

Tree 3: ₹510k

Final Output = Average = ₹510k
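
Bagging for classification can be sketched from scratch. The toy data and the choice of a 1-nearest-neighbour base learner (instead of decision trees) are assumptions made to keep the example short; this is not how Random Forest is implemented.

```python
import random
from collections import Counter


def fit_1nn(sample):
    """A deliberately high-variance base learner: 1-nearest-neighbour on a single feature."""
    def predict(x):
        nearest = min(sample, key=lambda row: abs(row[0] - x))
        return nearest[1]
    return predict


def bagging_fit(data, n_models, rng):
    """Train each model on its own bootstrap sample (rows drawn with replacement)."""
    models = []
    for _ in range(n_models):
        boot = [rng.choice(data) for _ in range(len(data))]
        models.append(fit_1nn(boot))
    return models


def bagging_predict(models, x):
    """Aggregate the individual predictions by voting."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: (petal length, flower class).
data = [(1, "rose"), (2, "rose"), (3, "rose"), (4, "rose"),
        (6, "tulip"), (7, "tulip"), (8, "tulip"), (9, "tulip")]

models = bagging_fit(data, n_models=25, rng=random.Random(0))
print(bagging_predict(models, 1.5), bagging_predict(models, 8.5))
```

The models do not depend on each other, so the training loop could run in parallel. For regression, the vote in `bagging_predict` would be replaced by an average.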

Boosting

What is it?

Trains models sequentially.

Each new model focuses on correcting errors made by previous ones.

Turns weak learners into a strong learner.

Common boosting algorithms: AdaBoost, Gradient Boosting, XGBoost, LightGBM

Classification Example: AdaBoost

Let’s say:

Model 1 correctly classifies most data but misclassifies some.

The next model focuses on those misclassified points (by increasing their weights).

This repeats for multiple models.

Final prediction = weighted vote of all models.
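
One round of the reweighting step can be written out explicitly. The sketch below follows the usual discrete AdaBoost update for two classes; the ten samples and the three mistakes are hypothetical.

```python
import math


def adaboost_round(weights, is_correct):
    """One round of discrete AdaBoost reweighting.

    weights: current sample weights (summing to 1)
    is_correct: whether the current weak learner classified each sample correctly
    Assumes the weighted error is strictly between 0 and 1.
    Returns (alpha, new_weights).
    """
    error = sum(w for w, ok in zip(weights, is_correct) if not ok)
    alpha = 0.5 * math.log((1 - error) / error)  # this learner's weight in the final vote
    # Shrink the weights of correct samples, grow the weights of misclassified ones.
    scaled = [w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, is_correct)]
    total = sum(scaled)
    return alpha, [w / total for w in scaled]


weights = [0.1] * 10                    # start with equal weights
is_correct = [True] * 7 + [False] * 3   # the weak learner gets 3 of 10 wrong

alpha, new_weights = adaboost_round(weights, is_correct)
print(round(alpha, 3))            # 0.424
print(round(new_weights[0], 3))   # 0.071 (a correctly classified sample)
print(round(new_weights[-1], 3))  # 0.167 (a misclassified sample)
```

After the update the three misclassified samples carry half of the total weight, so the next weak learner is pushed to get them right.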

Regression Example: Gradient Boosting Regressor

Step-by-step:

Model 1 predicts ₹600k; the residual (actual minus predicted) is -₹50k.

Model 2 is trained to predict that residual (-₹50k), and adding its output to Model 1's prediction improves the result.

Model 3 predicts remaining error, and so on.

Final prediction = Base prediction + all corrections
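
The residual-fitting loop can be sketched for squared-error loss. This is a simplified illustration, not the algorithm used by any particular library: the one-split "stump" learner, the learning rate of 0.5, and the toy prices are all assumptions.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump to the residuals by least squares."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the largest x so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_stages=20, learning_rate=0.5):
    """Base prediction plus a sequence of corrections, each fitted to the current residuals."""
    base = sum(ys) / len(ys)  # base prediction: the mean target
    stumps = []
    preds = [base] * len(ys)
    for _ in range(n_stages):
        residuals = [y - p for y, p in zip(ys, preds)]  # actual minus predicted
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1, 2, 3, 4, 5, 6]               # hypothetical feature, e.g. number of rooms
ys = [500, 520, 510, 580, 600, 610]   # hypothetical prices in ₹k

base_only = gradient_boost(xs, ys, n_stages=0)
boosted = gradient_boost(xs, ys, n_stages=20)
print(mse(base_only, xs, ys), mse(boosted, xs, ys))
```

The training error drops as stages are added; in practice the number of stages and the learning rate are tuned on held-out data to avoid overfitting.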

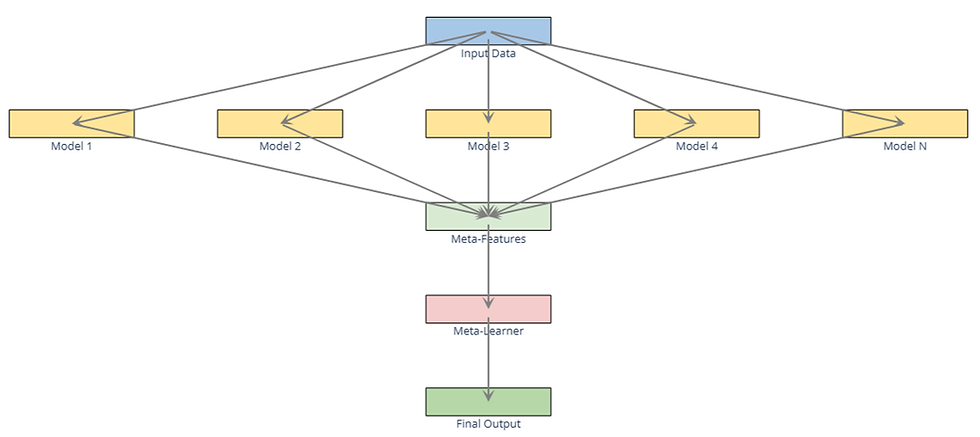

Stacking

Stacking, short for stacked generalization, is an ensemble learning technique that combines multiple machine learning models (called base learners) using a meta-learner (also called a blender or level-1 model). It can often outperform other ensemble methods like bagging or boosting, though not on every problem, because it learns how to best combine the predictions from different models.

Goal: Improve predictive performance by leveraging the strengths and compensating for the weaknesses of diverse models.

Key Idea: Instead of combining model predictions using simple rules (like voting or averaging), stacking learns how to combine them using another model.

Architecture of Stacking

Stacking generally involves two layers:

Level-0 (Base Learners):

A diverse set of models is trained on the training data.

Each model makes predictions independently.

Examples: Decision Trees, SVMs, k-NN, Logistic Regression, Neural Networks, etc.

Level-1 (Meta-Learner):

Takes the predictions of base learners as input features.

Learns to optimally combine those predictions to make the final prediction.

Common choices: Logistic Regression, Linear Regression, or even complex models like Gradient Boosting.

Stacking Workflow

Let’s consider a supervised learning problem (classification or regression).

Step-by-step Process:

Split the training data into K folds (for cross-validation).

Train each base model on K-1 folds, and use it to predict the remaining fold. Each prediction then comes from a model that never saw that row, and these out-of-fold predictions avoid data leakage into the meta-learner.

Collect out-of-fold predictions from all base models — these become the training input for the meta-learner.

Train the meta-learner on these new features (predictions from base models).

Final Prediction:

For new/unseen data: Base models make predictions, and these predictions are passed to the meta-learner, which outputs the final result.
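
The workflow above can be sketched end to end for a small regression problem. Several choices here are assumptions made for brevity: the two base learners, the toy data, a meta-learner that is just a single blend weight found by grid search, and refitting the base models on all training data before predicting new points.

```python
def fit_linear(xs, ys):
    """Base learner 1: least-squares line."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)


def fit_1nn(xs, ys):
    """Base learner 2: 1-nearest-neighbour regressor."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


BASE_LEARNERS = [fit_linear, fit_1nn]


def out_of_fold_predictions(xs, ys, k):
    """Predict every row with base models that were trained without that row's fold."""
    n = len(xs)
    oof = [[None] * len(BASE_LEARNERS) for _ in range(n)]
    for fold in range(k):
        held_out = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        for j, fit in enumerate(BASE_LEARNERS):
            model = fit([xs[i] for i in train], [ys[i] for i in train])
            for i in held_out:
                oof[i][j] = model(xs[i])
    return oof


def fit_meta(oof, ys, steps=100):
    """Meta-learner: blend weight w in [0, 1] with the lowest squared error on OOF predictions."""
    def sse(w):
        return sum((w * a + (1 - w) * b - y) ** 2 for (a, b), y in zip(oof, ys))
    return min((s / steps for s in range(steps + 1)), key=sse)


def fit_stack(xs, ys, k=3):
    w = fit_meta(out_of_fold_predictions(xs, ys, k), ys)
    base = [fit(xs, ys) for fit in BASE_LEARNERS]  # refit on all data for new points
    return (lambda x: w * base[0](x) + (1 - w) * base[1](x)), w


xs = list(range(1, 13))
ys = [3 * x + (4 if x % 4 == 0 else 0) for x in xs]  # hypothetical toy targets

stacked, w = fit_stack(xs, ys)
print(f"blend weight for the linear model: {w:.2f}")
print(f"prediction at x=5.5: {stacked(5.5):.2f}")
```

A real meta-learner would usually be a full model such as linear or logistic regression; the single blend weight keeps the idea visible without extra dependencies.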

Advantages of Stacking

Combines diverse models: Can include both simple and complex models, linear and nonlinear.

Reduces generalization error: By capturing different aspects of the data.

No restriction on model type: Heterogeneous ensembles are possible (unlike bagging and boosting, which typically use the same model type).

Flexible and powerful: Especially effective in competitions.

WHY IT WORKS

Let’s first discuss ensemble learning in the context of classification. As shown in the figure, we have a dataset consisting of three classes: triangles, squares, and circles. The black-colored points represent training data, while the green, orange, and blue points represent testing data.

We are using three different models (Model 1, Model 2, and Model 3), all trained on the same dataset. Each model learns a unique decision boundary based on its internal logic or structure, and as seen in the diagrams, their decision boundaries differ from one another — this shows that the models have different "opinions" on how to classify the data.

Now comes the role of ensemble learning. Instead of relying on a single model, we combine the predictions of all three models. In classification, ensemble methods often use a majority voting approach — meaning the class predicted by the majority of models is selected as the final prediction.

This combination results in a smoother and more accurate decision boundary, as it balances out the errors or biases of individual models. This is the core idea behind how ensemble learning improves performance in classification tasks.

Now let's discuss the case of regression.

As illustrated in the figure, suppose we have a dataset with several distinct regression lines. By calculating the average prediction across these individual models, we derive an ensemble line (shown as the dotted black line). This ensemble line often exhibits improved predictive performance compared to any single constituent model.
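
For the special case where every base model is a straight line, the averaging step can be written out. The symbols $a_m$ (slope) and $b_m$ (intercept) of model $m$ are notation introduced here for illustration:

```latex
\bar{f}(x) = \frac{1}{M}\sum_{m=1}^{M}\left(a_m x + b_m\right)
           = \left(\frac{1}{M}\sum_{m=1}^{M} a_m\right) x + \frac{1}{M}\sum_{m=1}^{M} b_m
           = \bar{a}\,x + \bar{b}
```

So the ensemble line is itself a line whose slope and intercept are the averages of the individual slopes and intercepts, which is why it runs through the middle of the individual lines.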