
Deep Learning

Deep Learning Optimizers Explained: NAG, Adagrad, RMSProp, and Adam

Standard Gradient Descent is rarely enough for modern neural networks. In this guide, we trace the evolution of optimization algorithms, from the 'look-ahead' mechanism of Nesterov Accelerated Gradient to the adaptive learning rates of Adagrad and RMSProp. Finally, we demystify Adam and show how it combines the best of both worlds: momentum and RMSProp-style adaptive learning rates.

Aryan

2 days ago

The Complete Intuition Behind CNNs: How the Human Visual Cortex Inspired Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are inspired by how the visual cortex detects edges, shapes, and patterns. This post explains CNNs through simple intuition, landmark experiments such as Hubel and Wiesel's cat study, the evolution from the Neocognitron to modern deep learning models, and practical applications in computer vision.

Aryan

Dec 31, 2025

Mastering Momentum Optimization: Visualizing Loss Landscapes & Escaping Local Minima

In the rugged landscape of Deep Learning loss functions, standard Gradient Descent often struggles with local minima, saddle points, and the infamous "zig-zag" path. This article breaks down the geometry of loss landscapes, from 2D curves to 3D contours, and explains how Momentum Optimization acts as a confident driver. Learn how a simple velocity term, a "moving average" of past gradients, can significantly accelerate model convergence and smooth out noisy training p

Aryan

Dec 26, 2025

Exponentially Weighted Moving Average (EWMA): Theory, Formula, Example & Intuition

Exponentially Weighted Moving Average (EWMA) is a core technique for smoothing noisy time-series data and tracking trends. In this post, we break down the intuition, the mathematical formulation, a step-by-step example, and the proof behind EWMA, including why it plays a crucial role in optimizers like Adam and RMSProp.

Aryan

Dec 22, 2025

Optimizers in Deep Learning: Role of Gradient Descent, Types, and Key Challenges

Training a neural network is fundamentally an optimization problem. This post explains the role of optimizers in deep learning, how gradient descent and its batch, stochastic, and mini-batch variants work, and why challenges like learning rate sensitivity, local minima, and saddle points motivate advanced optimization techniques.

Aryan

Dec 20, 2025

Batch Normalization Explained: Theory, Intuition, and How It Stabilizes Deep Neural Network Training

Batch Normalization is a powerful technique that stabilizes and accelerates the training of deep neural networks by normalizing layer activations. This article explains the intuition behind Batch Normalization, internal covariate shift, the step-by-step algorithm, and why BN improves convergence, gradient flow, and overall training stability.

Aryan

Dec 18, 2025

Why Weight Initialization Is Important in Deep Learning (Xavier vs He Explained)

Weight initialization plays a critical role in training deep neural networks. Poor initialization can lead to vanishing or exploding gradients, symmetry issues, and slow convergence. In this article, we explore why common methods like zero, constant, and naive random initialization fail, and how principled approaches like Xavier (Glorot) and He initialization maintain stable signal flow and enable effective deep learning.

Aryan

Dec 13, 2025

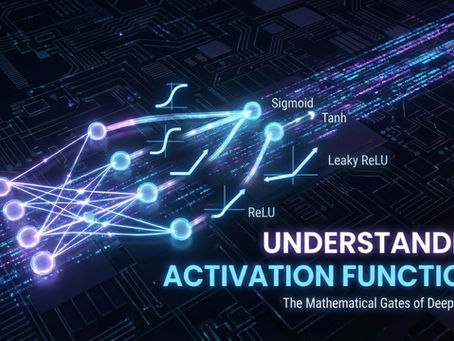

Activation Functions in Neural Networks: Complete Guide to Sigmoid, Tanh, ReLU & Their Variants

Activation functions give neural networks the power to learn non-linear patterns. This guide breaks down Sigmoid, Tanh, ReLU, and modern variants like Leaky ReLU, ELU, and SELU—explaining how they work, why they matter, and how they impact training performance.

Aryan

Dec 10, 2025

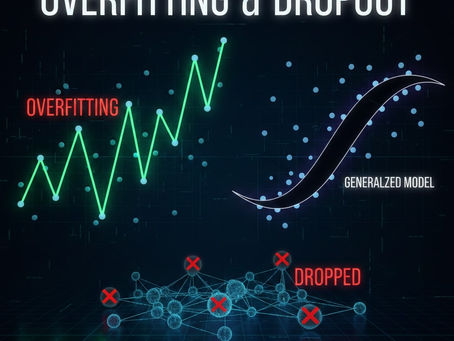

Dropout in Neural Networks: The Complete Guide to Solving Overfitting

Overfitting occurs when a neural network memorizes training data instead of learning real patterns. This guide explains how Dropout works, why it is effective, and how to tune it to build robust models.

Aryan

Dec 5, 2025

The Vanishing Gradient Problem & How to Optimize Neural Network Performance

This post explains the Vanishing Gradient Problem in deep neural networks: why gradients shrink as they flow backward through many layers, how this stalls learning in the earlier layers, and proven fixes like ReLU, BatchNorm, and Residual Networks. It also covers essential strategies to improve neural network performance, including hyperparameter tuning, architecture optimization, and troubleshooting common training issues.

Aryan

Nov 28, 2025

Backpropagation in Neural Networks: Complete Intuition, Math, and Step-by-Step Explanation

Backpropagation is the core algorithm for training neural networks: it computes how much each weight and bias contributes to the error, so they can be adjusted to minimize it. This guide explains the intuition, the math, the chain rule, and real-world examples, making it easy to understand how neural networks actually learn.

Aryan

Nov 24, 2025

Loss Functions in Deep Learning: A Complete Guide to MSE, MAE, Cross-Entropy & More

Loss functions are the backbone of every neural network — they tell the model how wrong it is and how to improve.

This guide breaks down key loss functions like MSE, MAE, Huber, Binary Cross-Entropy, and Categorical Cross-Entropy — with formulas, intuition, and use cases.

Understand how loss drives learning through forward and backward propagation, and why choosing the right loss function is crucial for model performance.

Aryan

Nov 6, 2025

What is an MLP? Complete Guide to Multi-Layer Perceptrons in Neural Networks

The Multi-Layer Perceptron (MLP) is the foundation of modern neural networks — the model that gave rise to deep learning itself.

In this complete guide, we break down the architecture, intuition, and mathematics behind MLPs. You’ll learn how multiple perceptrons, when stacked in layers with activation functions, can model complex non-linear relationships and make intelligent predictions.

Aryan

Nov 3, 2025
