Gradient Boosting For Regression - 1

- Aryan

- May 29, 2025

Updated: Jun 8, 2025

Boosting

Boosting is an ensemble learning technique in machine learning used to create a strong model by combining multiple weak models. A weak model is one that performs only slightly better than random guessing (like a shallow decision tree). The main idea of boosting is to improve overall performance by sequentially training each new model to correct the mistakes made by the previous ones.

Quick Comparison with Bagging:

Let’s first understand how Bagging works so we can see how Boosting is different.

Bagging (Bootstrap Aggregating):

In bagging, we take multiple base models (e.g., d1, d2, d3) and apply bootstrapping to our dataset.

Bootstrapping means creating multiple random samples with replacement from the original dataset.

Each model is trained on a different bootstrap sample.

When we get a new input (query point), we ask all models to make a prediction. Their outputs are then aggregated using methods like majority voting (for classification) or averaging (for regression).

Bagging is used when we have models that are low bias but high variance, like deep decision trees. These models tend to overfit, but bagging reduces their variance by averaging.

The philosophy behind bagging is the “wisdom of the crowd”—many diverse opinions averaged together tend to be more accurate.
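The bagging recipe above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the toy data is made up, the base model is a 1-nearest-neighbour regressor (chosen here as a simple low-bias, high-variance learner, not something the article prescribes), and the helper names `fit_1nn`, `bagging_fit` and `bagging_predict` are hypothetical.

```python
import random
from statistics import mean

def fit_1nn(xs, ys):
    """Return a 1-nearest-neighbour regressor (low bias, high variance)."""
    points = list(zip(xs, ys))

    def predict(x):
        # Predict the target of the closest training point.
        return min(points, key=lambda p: abs(p[0] - x))[1]

    return predict

def bagging_fit(xs, ys, n_models=25, seed=0):
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw n row indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_1nn([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    # Regression: aggregate by averaging the individual predictions.
    return mean(m(x) for m in models)

# Hypothetical toy data: y is roughly 2x with some noise.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]

models = bagging_fit(xs, ys)
print(bagging_predict(models, 4.5))
```

Note that the models never look at each other: each one sees only its own bootstrap sample, which is why they could be trained in parallel.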

Boosting – How It’s Different

Now let’s break down how Boosting differs from Bagging in three key ways:

1. Training Order:

Bagging trains all models in parallel, independently of each other.

Boosting trains models sequentially—each model is trained after the previous one.

2. Data Usage:

In bagging, each model gets a different random subset of the data.

In boosting, all models usually use the same dataset, but the way they treat each data point is different.

In weight-based boosting methods such as AdaBoost, we assign a weight (importance) to each data point.

If a data point was misclassified by the previous model, its weight is increased so that the next model focuses more on it.

3. Type of Base Models:

Bagging prefers complex models that tend to overfit (high variance), like large decision trees.

Boosting uses simple models with high bias but low variance, like shallow decision trees (a tree with a single split is called a decision stump).

These simple models may perform poorly on training data but can generalize well when properly combined.

Core Idea of Boosting

Here’s how boosting works step by step:

Start by assigning equal weights to all training examples.

Train the first model (m1) on the dataset.

m1 will make some mistakes — it may classify some data points incorrectly.

Increase the weights (importance) of the misclassified points.

This makes the next model (m2) focus more on the hard examples that m1 got wrong.

Train the second model (m2) on the same dataset, but now it focuses more on the difficult points.

m2 tries to correct the errors of m1.

Again, update weights: increase them for the points that m2 got wrong.

Train a third model (m3) to correct the mistakes of m2.

This process is repeated for several rounds.

In the end, we combine the predictions of all models using a weighted vote (for classification) or weighted sum (for regression).
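Here is one way the steps above could look in code. It is a sketch of AdaBoost, one specific weight-based boosting algorithm, using decision stumps on made-up 1D data. The formula for `alpha` (how much say each model gets in the final vote) and the exponential weight update come from AdaBoost and are not spelled out in the steps above, so treat them as assumptions of this example. The helper names are hypothetical.

```python
import math

def fit_stump(xs, ys, w):
    """Pick the threshold and sign with the lowest weighted error."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            preds = [sign if x <= t else -sign for x in xs]
            err = sum(wi for wi, p, y in zip(w, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best  # (weighted error, threshold, sign)

def stump_predict(t, sign, x):
    return sign if x <= t else -sign

def adaboost_fit(xs, ys, n_rounds=5):
    n = len(xs)
    w = [1.0 / n] * n  # start with equal weights
    ensemble = []
    for _ in range(n_rounds):
        err, t, sign = fit_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)    # avoid log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # this model's say in the vote
        ensemble.append((alpha, t, sign))
        # Raise the weights of misclassified points, lower the rest.
        w = [wi * math.exp(-alpha * y * stump_predict(t, sign, x))
             for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]             # renormalise to sum to 1
    return ensemble

def adaboost_predict(ensemble, x):
    # Weighted vote of all stumps.
    score = sum(alpha * stump_predict(t, sign, x) for alpha, t, sign in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical 1D data that no single stump can separate (labels are +1 / -1).
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

ensemble = adaboost_fit(xs, ys)
print([adaboost_predict(ensemble, x) for x in xs])
```

No single stump gets this data right, but the weighted vote of a few stumps does, which is the "weak learners into a strong learner" idea in action.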

What Happens to Bias and Variance?

Initially, we use high bias models (like small trees), which may underfit.

As boosting goes on, each model reduces the error of the previous one, so the bias gradually decreases.

Because each individual model is simple, it adds little variance on its own.

With a sensible number of rounds, boosting produces a model that has low bias and reasonably low variance, which leads to high accuracy. Too many rounds can still overfit, so the number of models needs to be controlled.

Summary (Sticky Points):

Boosting = sequential correction of errors.

Same dataset used each round, but with adjusted weights.

Focuses on bias reduction.

Uses high bias, low variance models.

Final model is a weighted combination of all weak models.

Converts weak learners into a strong learner.

What is Gradient Boosting?

Gradient Boosting is an ensemble learning technique used in machine learning that builds a strong predictive model by combining several weak learners (typically decision trees). It works by adding models one by one, where each new model is trained to correct the mistakes of the combined previous models.

Works for regression, classification, and even ranking tasks.

Very effective when dealing with complex, non-linear datasets.

Often performs well with little hyperparameter tuning.

Available in major libraries like scikit-learn, XGBoost, LightGBM, CatBoost.

Concept of Additive Modeling

Boosting uses the idea of additive modeling, where we build the final function by adding many small simple models step by step.

Real-Life Analogy:

Imagine trying to guess the equation of a complex graph (like the one shown below):

This complex graph might look hard at first. But suppose you realize it's a combination of two simple functions:

y = x

y = sin(x)

So, the complex curve could be:

y = x + sin(x)

That’s additive modeling: build complex functions by adding simpler ones.

Boosting follows the same approach — instead of solving everything in one shot, it solves small parts and adds them together.
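The analogy is easy to play with in code. A tiny sketch, where the names `components` and `additive_model` are just illustrative:

```python
import math

# The two simple component functions from the analogy: y = x and y = sin(x).
components = [lambda x: x, math.sin]

def additive_model(x):
    # The complex curve is just the sum of the simple pieces.
    return sum(f(x) for f in components)

for x in (0.0, math.pi / 2, math.pi):
    print(x, additive_model(x))
```

In boosting, the list of components is not known in advance: it is built up one weak model at a time.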

What Does “Gradient” Mean Here?

The word “Gradient” comes from gradient descent — a mathematical method used to reduce error step by step.

In gradient boosting:

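We compute the gradient of the loss function with respect to the current predictions (it tells us in which direction, and by how much, each prediction is off).

Then we train the new weak model on the negative gradient, so that adding it moves the predictions in the direction that reduces the loss.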

It’s like going downhill slowly to find the best path.

So, gradient boosting = Additive Modeling + Gradient Descent.
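To make the link between "gradient" and "error" concrete, here is a small check, assuming the squared-error loss 0.5 * (y - prediction)^2 (the article has not fixed a loss at this point, so this choice is an assumption). For that loss, the negative gradient with respect to the prediction is simply the residual y - prediction. The numbers are made up.

```python
def squared_error(y, pred):
    return 0.5 * (y - pred) ** 2

def negative_gradient(y, pred):
    # d/d(pred) of 0.5 * (y - pred)^2 is -(y - pred),
    # so the negative gradient is the residual y - pred.
    return y - pred

def numeric_negative_gradient(y, pred, eps=1e-6):
    # Central finite difference, used only as a sanity check.
    return -(squared_error(y, pred + eps) - squared_error(y, pred - eps)) / (2 * eps)

y, pred = 10.0, 7.0  # hypothetical target and current prediction
print(negative_gradient(y, pred), numeric_negative_gradient(y, pred))
```

So with squared error, "fit the next model to the negative gradient" and "fit the next model to the residuals" are the same instruction.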

Final Sticky Notes

Gradient Boosting builds a model step-by-step.

Uses additive modeling: final model = sum of small models.

Each model learns from the mistakes of the previous ones.

Uses gradient descent to reduce error.

Turns weak learners → strong model.

Works well even with minimal tuning.

Supports regression, classification, ranking.

How It Works

Gradient Boosting Algorithm: Conceptual Overview

Gradient Boosting is a machine learning technique used for regression and classification tasks. It builds a strong predictive model by combining multiple weak learners, typically decision trees, in a sequential manner.

The main goal of gradient boosting is to find a mathematical function that maps the input features (x) to the output target (y) — that is, to model the relationship y = f(x).

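Gradient Boosting breaks down this complex function f(x) into a sum of simpler functions:

f(x) = f_0(x) + f_1(x) + f_2(x) + ... + f_M(x)

Here f_0(x) is the initial prediction and each later f_m(x) is the output of one small tree. This is known as additive modeling.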

Our example data has three rows:

| R&D | Ops | Marketing | Profit |
| --- | --- | --- | --- |
| 165 | 137 | 472 | 192 |
| 101 | 92 | 250 | 144 |
| 29 | 127 | 201 | 91 |

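Using Our Data:

The first model, f_0(x), is a constant: the mean of the Profit column, (192 + 144 + 91) / 3 ≈ 142.33. Every row starts with this same prediction:

| R&D | Ops | Marketing | Profit (y) | f_0(x) |
| --- | --- | --- | --- | --- |
| 165 | 137 | 472 | 192 | 142.33 |
| 101 | 92 | 250 | 144 | 142.33 |
| 29 | 127 | 201 | 91 | 142.33 |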
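The f_0(x) column can be reproduced in a couple of lines. The sketch also computes the residuals (actual Profit minus f_0), which, assuming a squared-error loss, are what the first tree would be trained on.

```python
from statistics import mean

profit = [192, 144, 91]  # target column from the table

f0 = mean(profit)                        # constant initial prediction
residuals = [y - f0 for y in profit]     # what is still left to explain

print(round(f0, 2))                      # 142.33
print([round(r, 2) for r in residuals])  # [49.67, 1.67, -51.33]
```

The residuals sum to zero, which is always true when the starting prediction is the mean.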

Terminal Region in Decision Trees

1. Introduction to Decision Trees

Decision trees are a type of supervised learning algorithm primarily used for classification and regression tasks. They work by recursively partitioning the data based on feature values, forming a tree-like structure.

A typical decision tree consists of:

Nodes: These represent decision points based on a particular feature.

Leaf Nodes (or Terminal Nodes): These are the final nodes of the tree, representing the output or prediction. Data reaching a leaf node is assigned to that node's outcome.

2. Understanding Terminal Regions in Regression Trees

In the context of regression trees, the concept of a "terminal region" is crucial. When a decision tree is used for regression, its primary goal is to predict a continuous output variable (Y) based on input features (X). The tree achieves this by dividing the input space (the range of X values) into distinct, non-overlapping regions.

Each of these final, undivided regions corresponds directly to a leaf node of the decision tree. These are the "terminal regions." Within each terminal region, the regression tree predicts a constant value (typically the mean or median of the output variable for all training data points falling into that region).

Consider our regression data:

The tree will apply a series of splits (vertical lines in a 1D feature space) to partition the data.

Each split aims to minimize the error (e.g., sum of squared errors) within the resulting regions.

The final, undivided segments of the input space are the terminal regions.

As shown in the image, our tree divides the regression data's input space (X) into three distinct segments, labeled 1, 2, and 3. These three segments are precisely what we refer to as terminal regions. For any new data point that falls into Region 1, Region 2, or Region 3, the regression tree will output a specific predicted value associated with that particular terminal region.
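A rough sketch of the same idea in code, with made-up 1D data and two made-up split points (`splits`) standing in for the splits a real tree would learn. Each of the three terminal regions predicts the mean target of the training points that fall inside it.

```python
from bisect import bisect_right
from statistics import mean

# Hypothetical 1D training data and two hypothetical split points,
# which carve the x-axis into three terminal regions.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [5.0, 6.0, 5.5, 12.0, 11.0, 13.0, 20.0, 21.0, 19.0]
splits = [3.5, 6.5]

def region_of(x):
    # 0 -> Region 1, 1 -> Region 2, 2 -> Region 3
    return bisect_right(splits, x)

# Each terminal region predicts the mean target of its training points.
region_values = [
    mean(y for x, y in zip(xs, ys) if region_of(x) == r)
    for r in range(len(splits) + 1)
]

def predict(x):
    return region_values[region_of(x)]

print(region_values)  # [5.5, 12.0, 20.0]
print(predict(4.2))   # 12.0, because 4.2 falls in Region 2
```

Every point inside a region gets the same output, which is why a regression tree's prediction looks like a staircase.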

Key Insight:

Each tree tries to correct the residual (error) from the previous model.

Over iterations, the model gets better at approximating the true function.

Final output is initial prediction + sum of all tree outputs.

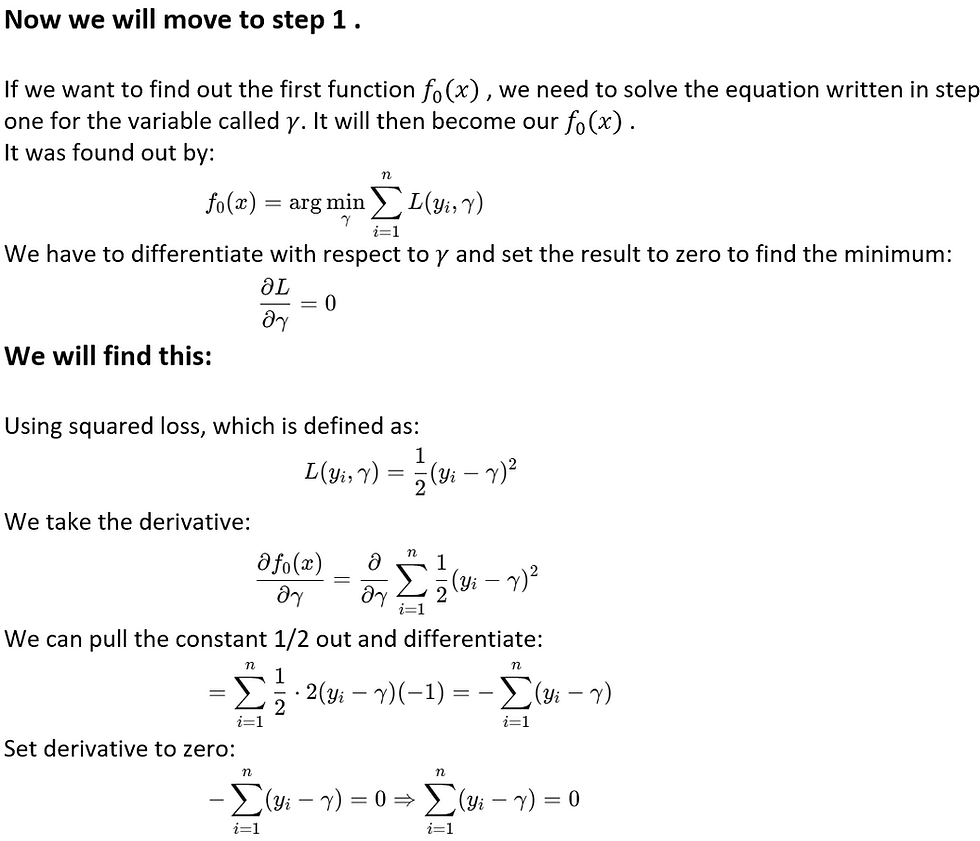

Gradient Boosting for Regression

If we are using Gradient Boosting for regression, we begin with input features and an output column (target values). The core idea is to train a series of models sequentially, where each new model tries to correct the errors made by the previous ones.

We keep adding new decision trees in a stage-wise manner, where each tree tries to fix the residual errors from the previous ensemble. This approach helps build a strong model from multiple weak learners, increasing accuracy and performance over time.
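Putting the pieces together, here is a minimal sketch of gradient boosting for regression on the three-row table from earlier. Several things are assumptions of this example rather than the article's definitive method: the loss is squared error (so each tree fits the residuals), every tree is a one-split stump, and each tree's output is scaled by a `learning_rate` of 0.1 before being added. The helper names `fit_stump` and `gradient_boost` are hypothetical, and real libraries do much more than this.

```python
from statistics import mean

# Rows from the example table: (R&D, Ops, Marketing) -> Profit
X = [(165, 137, 472), (101, 92, 250), (29, 127, 201)]
y = [192, 144, 91]

def fit_stump(X, targets):
    """Fit a one-split regression tree that minimises squared error."""
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate threshold between two values
            left = [r for row, r in zip(X, targets) if row[j] <= t]
            right = [r for row, r in zip(X, targets) if row[j] > t]
            lv, rv = mean(left), mean(right)  # terminal region values
            sse = (sum((r - lv) ** 2 for r in left)
                   + sum((r - rv) ** 2 for r in right))
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    _, j, t, lv, rv = best
    return lambda row: lv if row[j] <= t else rv

def gradient_boost(X, y, n_trees=50, learning_rate=0.1):
    f0 = mean(y)               # initial prediction: the mean of the target
    preds = [f0] * len(y)
    trees = []
    for _ in range(n_trees):
        # Residuals of the current ensemble become the next tree's target.
        residuals = [yi - p for yi, p in zip(y, preds)]
        tree = fit_stump(X, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(row) for p, row in zip(preds, X)]
    # Final model: initial prediction + scaled sum of all tree outputs.
    return lambda row: f0 + learning_rate * sum(tree(row) for tree in trees)

model = gradient_boost(X, y)
print([round(model(row), 2) for row in X])
```

The printed predictions should land close to the actual Profit values. With only three training rows this mostly shows the mechanics: on real data you would check the error on held-out rows, not on the training set.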