Lasso Regression

- Aryan

- Feb 12, 2025

- 2 min read

What is Lasso Regression?

Lasso Regression (Least Absolute Shrinkage and Selection Operator) is a type of linear regression that includes an L1 regularization term to prevent overfitting and handle multicollinearity. It modifies the Ordinary Least Squares (OLS) cost function by adding a penalty term that shrinks some coefficients to zero, enabling feature selection.

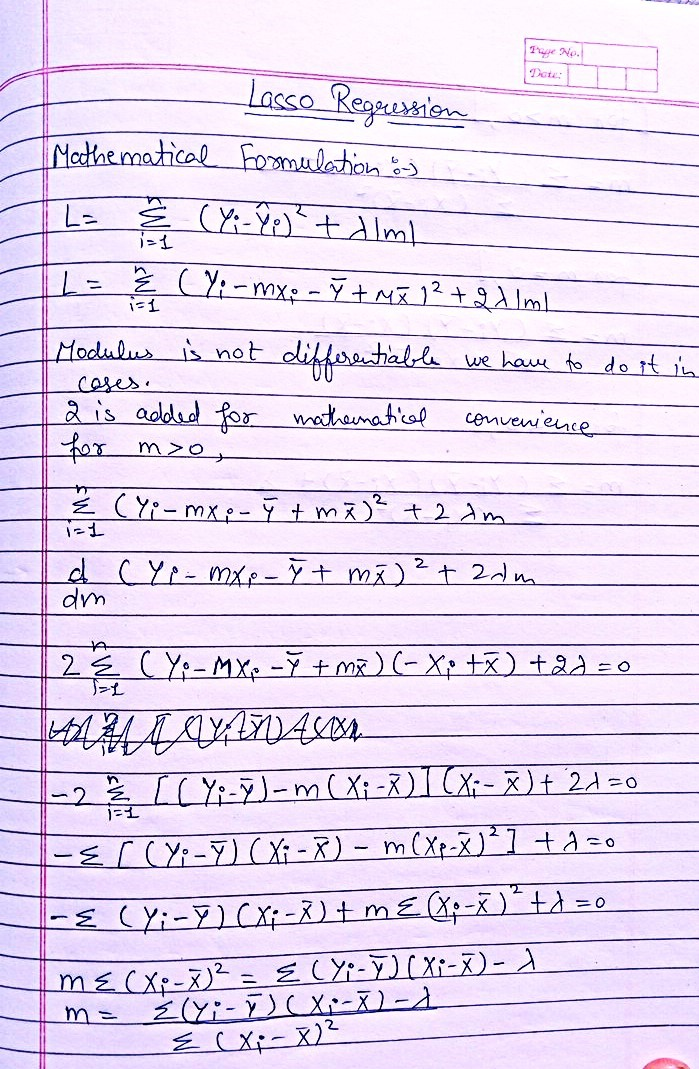

Lasso Regression Cost Function

In standard linear regression, the cost function is:

In Lasso Regression, an L1 penalty term is added:

where:

λ (lambda) is the regularization parameter, controlling the penalty strength.

∑ |w| is the sum of absolute values of coefficients (L1 norm).

If λ = 0, Lasso Regression behaves like OLS.

If λ is large, some coefficients become exactly zero, performing feature selection.

When to Use Lasso Regression?

When predictors are highly correlated (multicollinearity).

When you want to perform feature selection by eliminating less important variables.

When the model suffers from high variance (overfitting) and needs regularization.

Understanding Lasso Regression

How the coefficients get affected?

Lasso Regression shrinks some coefficients to exactly zero, effectively removing less important features from the model.

How large values of coefficients are affected?

Large coefficients are penalized heavily because Lasso adds their absolute values to the loss function.

This ensures that only the most significant predictors remain in the model.

Why is it called Lasso Regression?

The name "Lasso" comes from its ability to lasso (select) important features while shrinking others to zero.

It helps in feature selection, unlike Ridge Regression, which keeps all features with reduced magnitudes.

Lasso Regression: How It Shrinks and Eliminates Coefficients

Case: m > 0 (Slope is Positive)

When the slope (m) is positive, the numerator in the equation will also be positive.

If we increase the value of the regularization parameter (λ), it will decrease the numerator.

At a certain threshold, when the numerator becomes zero, the coefficient mmmwill also become zero.

However, once mmmis zero, a different equation is applied, which prevents it from going negative.

Key Takeaway:

As λ increases, m gradually reduces to zero but does not become negative after reaching zero.

Case: m < 0 (Slope is Negative)

When m is negative, a different equation governs its behavior.

As λ increases, the numerator (which is also negative) starts moving toward zero.

At a certain point, when λ is sufficiently large, m reaches exactly zero.

However, after reaching zero, the equation follows a different rule, preventing mmmfrom becoming positive again.

Key Takeaway:

Negative coefficients are pushed toward zero as λ increases and are set to zero at a certain threshold.

After reaching zero, they are not allowed to become positive again.

Case: m = 0 (Slope is Already Zero)

If m is already zero, the equation behaves just like standard linear regression.

The coefficient will remain zero unless there is a strong reason for it to become non-zero (i.e., very low λ).

Key Takeaway:

If a coefficient is zero, it will stay zero unless regularization strength is very low.

Mathematical Formulation